Supercharged One-step Text-to-Image Diffusion Models with Negative Prompts

ICCV 2025, Honolulu, Hawai'i

Abstract

The escalating demand for real-time image synthesis has driven significant advancements in one-step diffusion models,

which inherently offer expedited generation speeds compared to traditional multi-step methods. However, this enhanced

efficiency is frequently accompanied by a compromise in the controllability of image attributes. While negative prompting,

typically implemented via classifier-free guidance (CFG), has proven effective for fine-grained control in multi-step models,

its application to one-step generators remains largely unaddressed. Due to the lack of iterative refinement, as in multi-step

diffusion, directly applying CFG to one-step generation leads to blending artifacts and diminished output quality. To fill

this gap, we introduce Negative-Away Steer Attention (NASA), an efficient method that

integrates negative prompts into one-step diffusion models. NASA operates within the intermediate representation space by

leveraging cross-attention mechanisms to suppress undesired visual attributes. This strategy avoids the blending artifacts

inherent in output-space guidance and achieves high efficiency, incurring only a minimal 1.89\% increase in FLOPs compared

to the computational doubling of CFG. Furthermore, NASA can be seamlessly integrated into existing timestep distillation

frameworks, enhancing the student's output quality. Experimental results demonstrate that NASA substantially improves

controllability and output quality, achieving an HPSv2 score of 31.21, setting a new state-of-the-art benchmark

for one-step diffusion models.

Quantitative Results

| Method | Anime | Photo | Concept Art | Paintings | Average |

|---|---|---|---|---|---|

| PixArt-α-based backbone | |||||

| PixArt-α [Teacher] | 29.62 | 29.17 | 28.79 | 28.69 | 29.07 |

| YOSO | 28.75 | 28.06 | 28.52 | 28.57 | 28.48 |

| + NASA-I | 28.74 | 28.05 | 28.56 | 28.60 | 28.49 (+0.01) |

| DMD | 29.31 | 28.67 | 28.46 | 28.41 | 28.71 |

| + CFG = 1.5 | 30.02 | 27.07 | 28.36 | 28.07 | 28.38 (-0.33) |

| + CFG = 2.5 | 26.74 | 23.86 | 25.13 | 24.66 | 25.10 (-3.61) |

| + NASA-I | 29.33 | 28.71 | 28.49 | 28.53 | 28.77 (+0.06) |

| SBv2 | 32.19 | 29.09 | 30.39 | 29.69 | 30.34 |

| + NASA-I | 32.60 | 29.58 | 31.09 | 30.65 | 30.98 (+0.64) |

| + NASA-T | 32.33 | 29.26 | 30.75 | 30.10 | 30.61 (+0.27) |

| + NASA-T + CFG = 1.5 | 29.47 | 26.50 | 28.22 | 27.68 | 27.97 (-2.37) |

| + NASA-T + NASA-I | 32.66 | 29.67 | 31.31 | 30.71 | 31.09 (+0.75) |

| + NASA-T + NASA-I (α = 1) | 32.65 | 29.65 | 31.45 | 31.06 | 31.21 (+0.87) |

| Stable Diffusion 1.5-based backbone | |||||

| SDv1.5 [Teacher] | 26.51 | 27.19 | 26.06 | 26.12 | 26.47 |

| InstaFlow-0.9B | 26.10 | 26.62 | 25.92 | 25.95 | 26.15 |

| + NASA-I | 26.15 | 26.68 | 25.93 | 25.98 | 26.19 (+0.04) |

| DMD2 | 26.39 | 27.00 | 25.80 | 25.82 | 26.25 |

| + NASA-I | 26.39 | 27.02 | 25.80 | 25.83 | 26.26 (+0.01) |

| SBv2* | 27.18 | 27.58 | 26.69 | 26.62 | 27.02 |

| + NASA-I | 27.19 | 27.59 | 26.71 | 26.63 | 27.03 (+0.01) |

| + NASA-T | 27.64 | 27.94 | 27.19 | 27.03 | 27.45 (+0.43) |

| + NASA-T + NASA-I | 27.65 | 27.97 | 27.18 | 27.04 | 27.46 (+0.44) |

| Stable Diffusion 2.1-based backbone | |||||

| SDv2.1 [Teacher] | 27.48 | 26.89 | 26.86 | 27.46 | 27.17 |

| SBv2 | 27.25 | 27.62 | 26.86 | 26.77 | 27.13 |

| + NASA-I | 27.44 | 27.84 | 26.91 | 27.02 | 27.30 (+0.17) |

| SBv2* | 27.56 | 27.84 | 26.97 | 27.03 | 27.35 |

| + NASA-I | 27.70 | 28.00 | 27.12 | 27.22 | 27.51 (+0.16) |

| + NASA-T | 28.00 | 28.65 | 27.44 | 27.26 | 27.84 (+0.49) |

| + NASA-T + NASA-I | 28.04 | 28.68 | 27.51 | 27.50 | 27.93 (+0.58) |

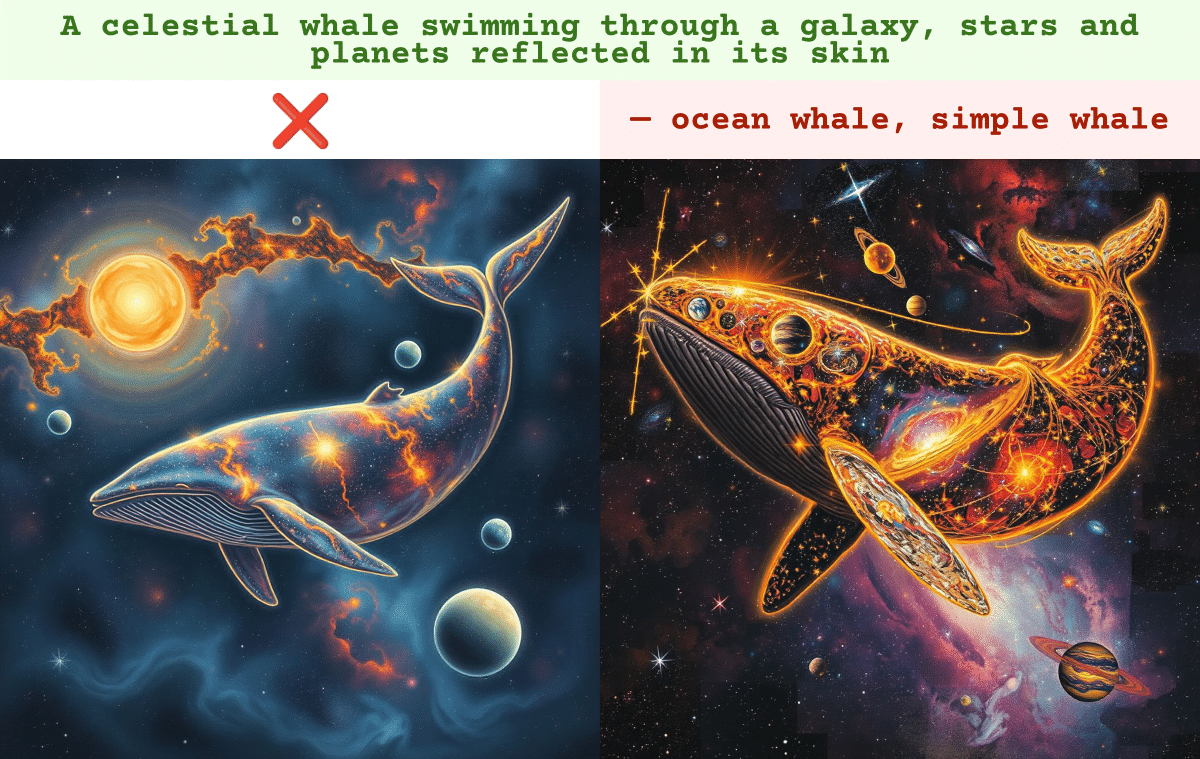

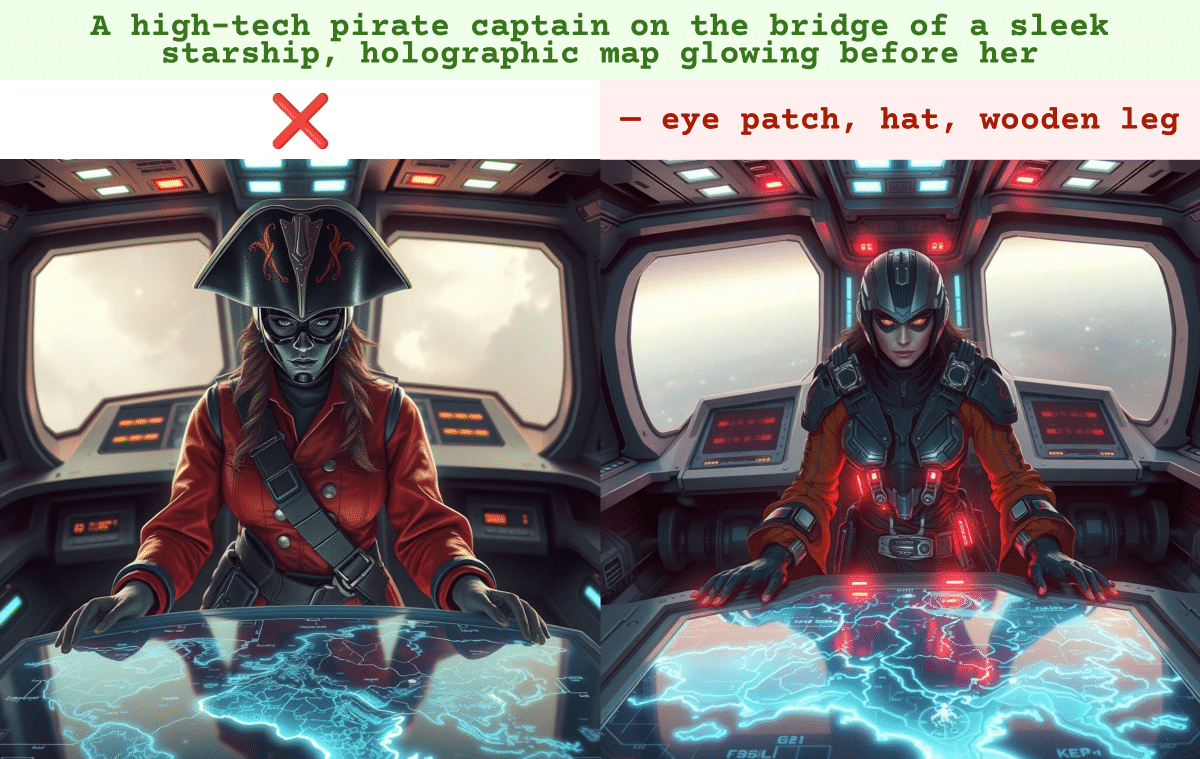

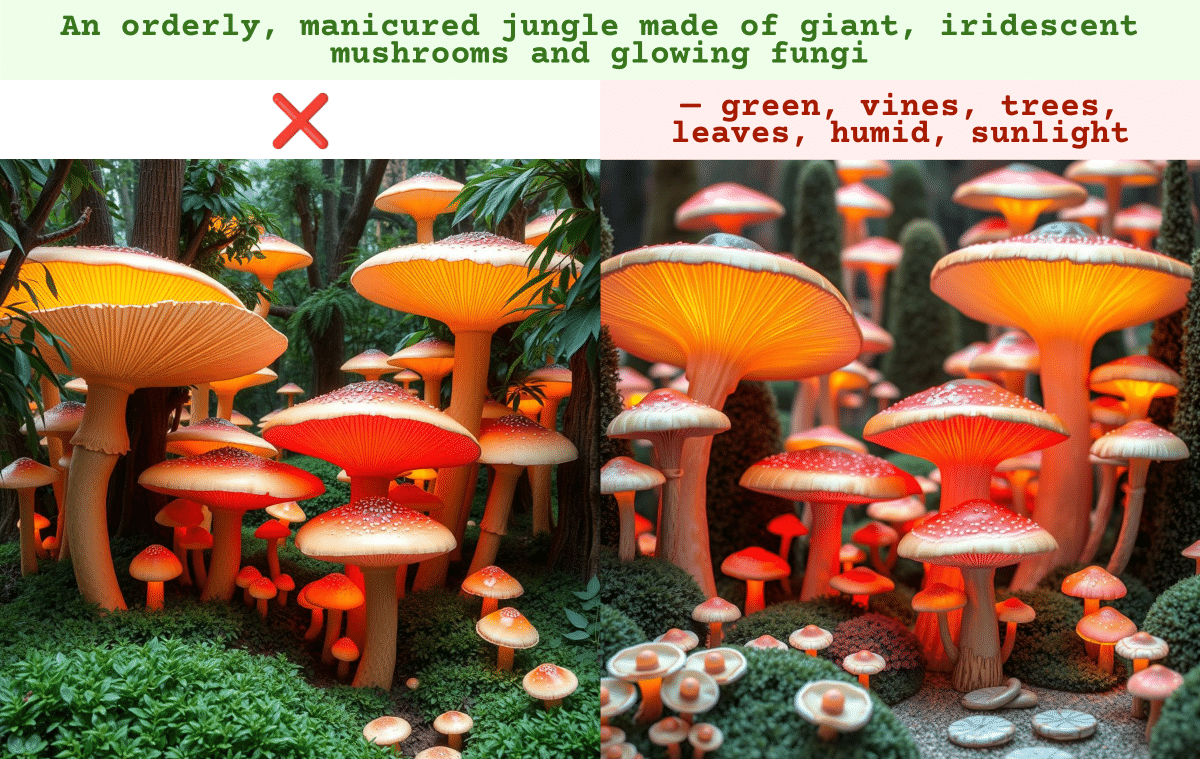

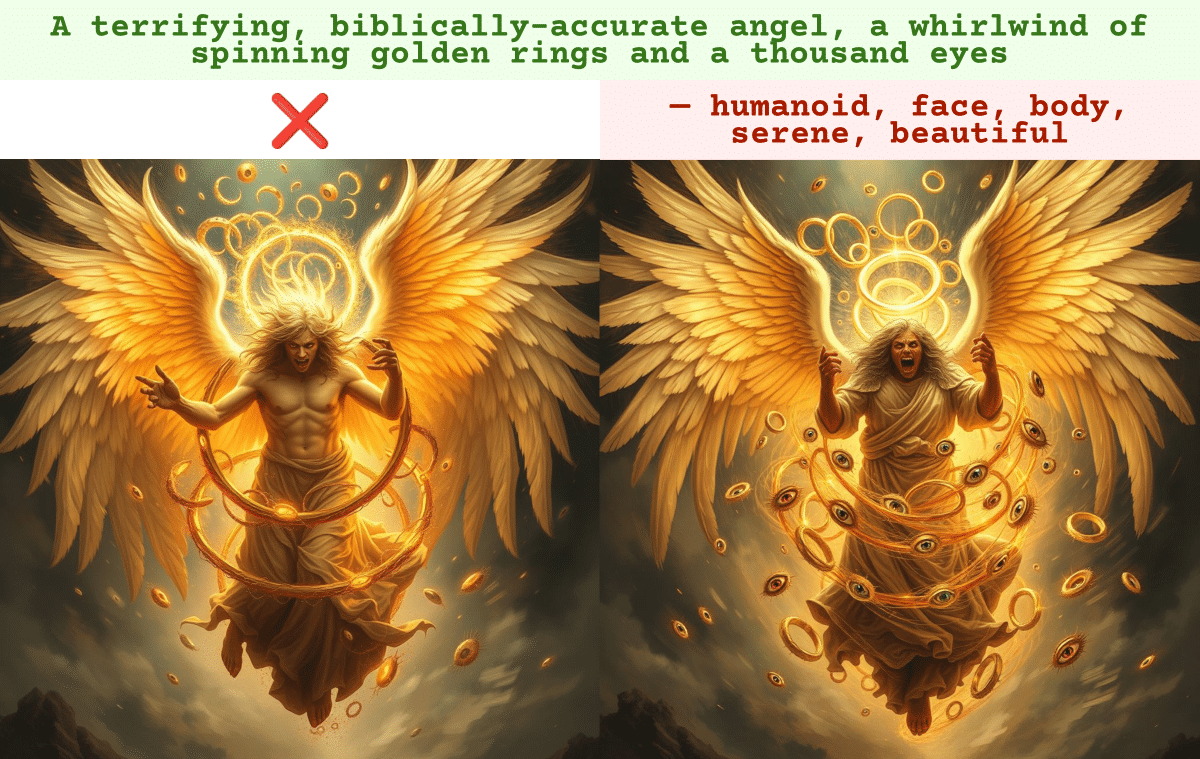

Qualitative Results: NASA-I with SDXL-DMD2

Qualitative Results: NASA-I with FLUX.1-schnell